Transforming Data Management for Managed Service Providers and Agencies Using GenAI

In the dynamic world of Agencies and Managed Service Providers (MSPs), staying ahead often involves grappling with the complexities of data migration and cleanup. These challenges are not new, but with the advent of Generative AI (GenAI), there's a transformative solution on the horizon.

In the dynamic world of Agencies and Managed Service Providers (MSPs), staying ahead often involves grappling with the complexities of data migration and cleanup. These challenges are not new, but with the advent of Generative AI (GenAI), there's a transformative solution on the horizon. In this blog, we'll explore the common data migration and cleanup issues faced by professionals in these fields and delve into how GenAI, and Querri in particular, is emerging as a game-changer.

The Messy Reality of Data

The Daily Grind: Navigating Data Mapping Challenges

Agencies and MSPs routinely receive substantial volumes of data from clients via FTP or other means. They must clean up and map this data to their business processes to serve their clients' customers. The inherent messiness of data, with newly added fields, deleted fields, field type changes, inconsistent formatting, and the absence of standardized practices, poses a significant hurdle to cleaning up and migrating the data to their internal systems.

While working at ServiceSource, a leading MSP specializing in renewal sales for industry giants such as Dell and Cisco, I grappled with the persistent challenge of downstream data cleanup. Every month, we received millions of records related to expiring service contracts for the next three months. Unfortunately, the dataset consistently exhibited poor quality, with frequently changing or differently formatted fields. This necessitated extensive efforts from the data engineering team to rectify existing scripts through trial and error. Running these scripts over several days was the norm to obtain the final output required for generating quotes.

Tackling Data Quality Issues Related to Customer Contact Information

One major challenge faced by these agencies involves the quality of contact data necessary for reaching out to clients' customers for sales or service purposes. Customers frequently undergo changes such as going out of business, merging with others, or relocating their headquarters, making it challenging to maintain dynamic contact information.

Our objective at ServiceSource was to generate quotes with accurate contact details, ensuring streamlined sales pipeline management and preventing futile efforts on invalid contacts for customers. Imagine a scenario where obtaining the correct contact information requires a dedicated team of business analysts conducting extensive research, including Google and LinkedIn searches and direct calls to various individuals, to verify accuracy.

Out of the millions of expiring service contracts, the team could only prioritize the top 10% deemed high value. Unfortunately, this meant that 90% of customers never received a quote upon contract expiration due to the overwhelming volume of data with incorrect contact information. Despite this imperfect solution, clients accepted the reality, acknowledging the absence of a viable alternative to maintain up-to-date customer contact information on their end.

The GenAI Solution: Transforming Cleanup Efforts

In this landscape of perpetual downstream cleanup struggles, GenAI enters as a revolutionary solution. GenAI promises to break free from the shackles of manual interventions and script rewrites, offering a transformative approach to data cleanup that is not only efficient but also adaptive to evolving data challenges.

Querri uses natural language as input to transform data, eliminating the need for business analysts or data scientists to map fields in advance. Field mapping occurs dynamically during processing. Once data scientists or business analysts transform data using Natural Language Processing (NLP) within Querri — changing data formats, removing columns, splitting columns, eliminating blanks, categorizing data into buckets, and validating the output — they can reuse the same commands for future files. All commands used on the data are stored in the flow history. This data pipeline can be automated and scheduled, allowing cleanup to occur automatically for new data files flowing through the system.

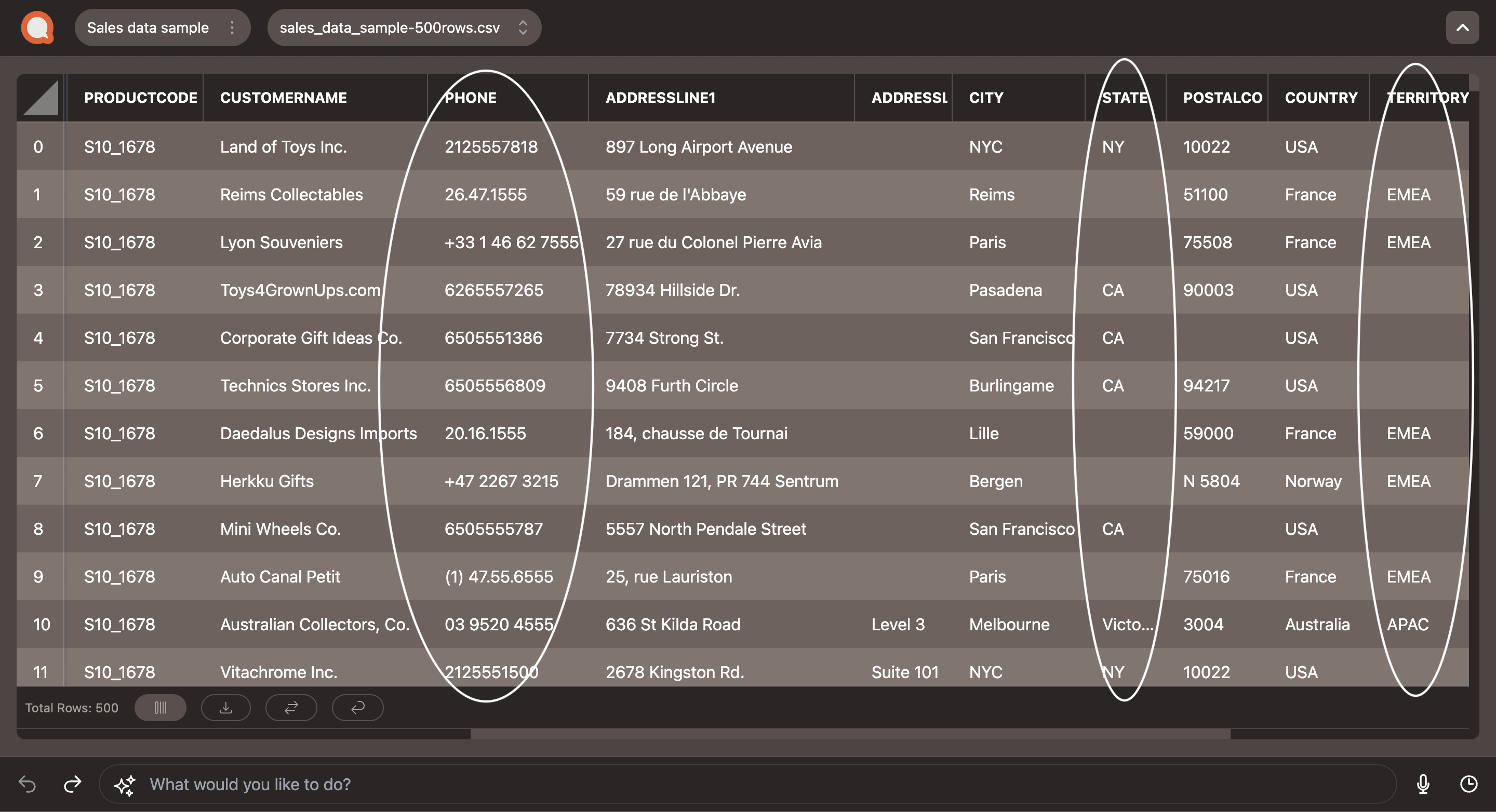

Now, let's examine a sample dataset I used to showcase the power of Querri for data cleanup and augmentation. I trimmed the dataset to 500 rows. You'll observe that the PHONE numbers are not well-formatted, with most missing country code information. The POSTAL CODE and TERRITORY columns also have missing data.

Now, imagine populating the missing data using VLOOKUP in an Excel spreadsheet. You'll need multiple mapping sheets to get this done. Besides, it is very complex to populate missing postal codes since cities can have multiple postal codes. Also, imagine populating the phone country code for all the PHONE numbers.

All of this can be accomplished using simple commands in Querri with natural language. You don't have to think too hard or map any fields.

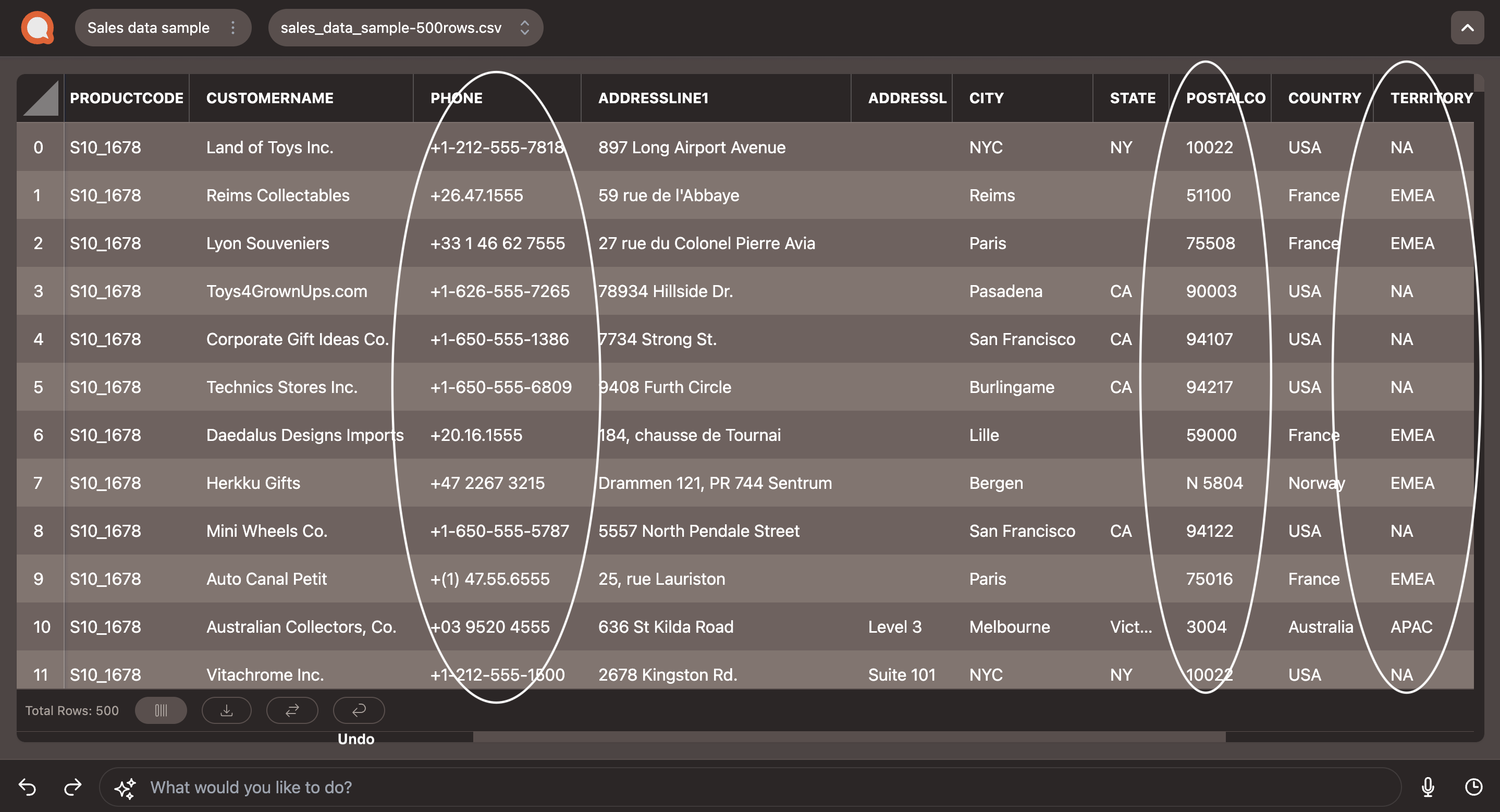

Here are the commands I provided to clean up the data:

- Populate the TERRITORY based on COUNTRY

- Populate POSTALCODE based on ADDRESSLINE1, CITY, STATE, and COUNTRY where empty

- This created a new PostalCode column instead of updating the existing POSTALCODE column, so I had to merge the two columns in the next step.

- Merge POSTALCODE and PostalCode and if there is conflict keep value from POSTALCODE

- Reformat PHONE number to readable format and add country code where COUNTRY is USA

- Add country code to PHONE where country code is missing and COUNTRY is not USA

I executed each of the commands one at a time. This did require a few trial-and-error attempts to get the final data to look the way I wanted. It is effortless to undo and redo commands within Querri, if a certain command doesn't behave the way you intend. Once you execute a command, check the results, and if they are not what you want, you can hit the undo button and then reframe your command to be more specific and try again. Sometimes, you will need to split up your commands. However, once you have the final set of commands, you can reuse them every time a new file comes in.

Now that you have witnessed the power of this GenAI data tool, you have the opportunity to play around with it. You can even try out the sample data set linked above and use the commands listed in the blog to see how it works. I would love to hear about your experience once you get a chance to experiment with this tool. Request here for early access.